SEATTLE – Rufus, the app-based AI shopping assistant introduced by Amazon last year, is now augmented with an object-detection model that identifies items visible in the camera of a user’s phone and delivers real-time data related to the items, including matching products on Amazon’s marketplace.

The company has introduced Lens Live as an upgrade of the Amazon Shopping app to allow browsing for items by pointing a camera at them. The Lens Live feature is initially launching on the Amazon Shopping app on iOS.

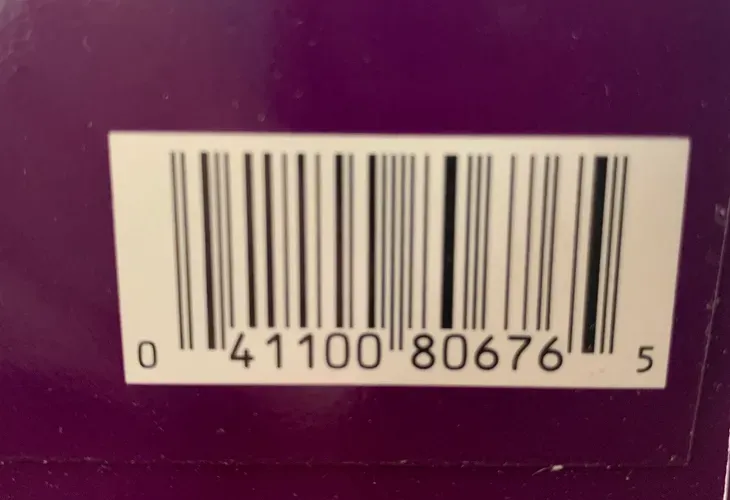

Lens Live will not replace Amazon’s existing visual search tool, Amazon Lens, which lets users take a picture, upload an image, or scan a barcode to discover products. Instead, it brings a real-time component to Amazon Lens that allows users to point their phone at objects to retrieve product details as well as potential substitutes.

Users see the top-matching items in a carousel, where they can swipe across the options, compare them, and add them to the cart directly from the same view. If there are multiple objects in the camera view, the desired item can be isolated with the tap of a finger.

Lens Live uses a deep-learning visual embedding model to match the customer’s view against billions of Amazon products, according to an Amazon spokesman, who noted that the Amazon Lens widget is used by tens of millions of customers each month to find what they’re looking for quickly and easily.
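
Amazon has not published how the matching works internally. As a rough illustration of the general technique of embedding-based visual search, the sketch below ranks a few catalog items by cosine similarity to a query vector. The three-dimensional vectors, the product names, and the `top_matches` helper are hypothetical stand-ins, not Amazon's model or API; a production system would use learned embeddings with far more dimensions and an approximate nearest-neighbor index rather than a full scan.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # treat a zero vector as matching nothing
    return dot / (norm_a * norm_b)


def top_matches(query, catalog, k=3):
    """Rank catalog items by similarity to the query and keep the best k."""
    scored = [(name, cosine_similarity(query, vec)) for name, vec in catalog.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Hypothetical embeddings; real ones would come from a trained vision model.
catalog = {
    "desk lamp": [0.9, 0.1, 0.0],
    "floor lamp": [0.8, 0.3, 0.1],
    "coffee mug": [0.0, 0.2, 0.9],
}
query = [0.85, 0.15, 0.05]  # stand-in for the embedding of the camera view

for name, score in top_matches(query, catalog, k=2):
    print(f"{name}: {score:.3f}")
```

In this toy setup the two lamps rank ahead of the mug because their vectors point in nearly the same direction as the query, which is the intuition behind showing the closest matches first in a results carousel.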

In making Rufus available in the new experience, Amazon allows users to see AI-generated product summaries and suggested questions or conversational prompts they can ask to learn more about the item. The company said this lets shoppers research items and access product insights before deciding to buy.