By Jon Nordmark, CEO and co-founder of Iterate.ai

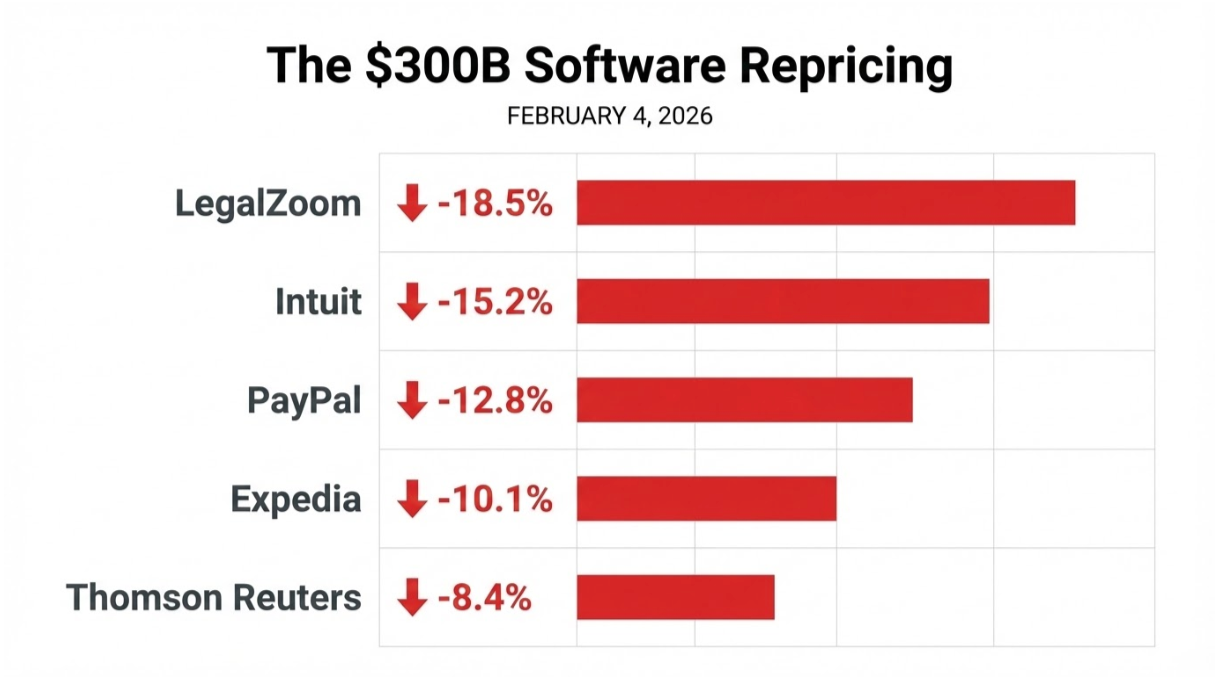

You may have seen this week’s Wall Street Journal article, “Threat of New AI Tools Wipes $300 Billion Off Software and Data Stocks.” LegalZoom, Intuit, PayPal, Expedia, and Thomson Reuters were all hit hard, as were private-equity firms heavily exposed to software.

The trigger: Anthropic announced legal AI plugins for Claude Cowork that can automate contract review, NDA triage, and compliance workflows—threatening legal tech first, then raising questions about all workflow-heavy software.

The same day, Walmart crossed $1 trillion in market cap—the first traditional retailer to reach that milestone—powered by AI adoption, e-commerce growth, and automation investments.

This selloff wasn’t a rejection of AI. It was a repricing of where value actually lives in the emerging software stack.

And as AI systems become more autonomous, governance and control will increasingly become an integral part of the value stack, alongside the ability to run private AI.

The market hasn’t fully made this distinction yet — but it will. Over time, value will concentrate in the ability to run private AI and govern AI systems end-to-end.

Here’s why Iterate sits on the right side of that shift.

1. Almost everything we build is built on Interplay

Interplay was designed to be modular and composable from day one.

Our AI-first products, such as Generate, Frontline, and Extract, are built on it. So is almost all of the custom AI we build for clients.

That foundation gives enterprises the ability to:

- swap LLMs without rewriting applications

- move inference between cloud, on-prem, or edge

- fine-tune models — and in many cases own their LLMs

- control exactly how data moves, is stored, and is accessed

This isn’t a roadmap item. It’s native to the platform.

Examples we see in practice:

- swapping from a shared public LLM to a private, fine-tuned model

- running inference locally or air-gapped for regulated teams

- using different models for different agents in the same workflow
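To make "swap LLMs without rewriting applications" concrete, the pattern can be sketched as a thin, model-agnostic interface that application code depends on. This is a minimal sketch under stated assumptions, not Interplay's actual API: `ChatModel`, `PublicAPIModel`, `PrivateFineTunedModel`, and `run_workflow` are hypothetical names, and the two model classes return canned strings instead of calling real endpoints.

```python
from typing import Protocol


class ChatModel(Protocol):
    """Hypothetical minimal interface that every model backend satisfies."""

    name: str

    def complete(self, prompt: str) -> str: ...


class PublicAPIModel:
    """Stand-in for a shared, hosted LLM (no real network call is made)."""

    name = "public-shared-llm"

    def complete(self, prompt: str) -> str:
        return f"[{self.name}] {prompt}"


class PrivateFineTunedModel:
    """Stand-in for a private, fine-tuned model served on-prem or air-gapped."""

    name = "private-finetuned-llm"

    def complete(self, prompt: str) -> str:
        return f"[{self.name}] {prompt}"


def run_workflow(agent_models: dict[str, ChatModel], task: str) -> dict[str, str]:
    """Run each agent on the task with whichever model it is mapped to.

    The workflow depends only on the ChatModel interface, so changing the
    mapping swaps the model without touching this function.
    """
    return {agent: model.complete(task) for agent, model in agent_models.items()}


if __name__ == "__main__":
    # Different agents in the same workflow can use different models.
    config = {
        "summarizer": PublicAPIModel(),
        "reviewer": PrivateFineTunedModel(),
    }
    print(run_workflow(config, "Review clause 4.2"))
```

Under this design, moving a regulated team to a private model would be a one-line configuration change, for example `config["summarizer"] = PrivateFineTunedModel()`, while the workflow code stays the same.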

These capabilities matter more now that switching costs are collapsing.

2. That foundation lets us build applications that are intentionally replaceable

We are full-stack, and we do offer applications — but they’re designed to be updated fast.

We build them with AgentOne, our enterprise-grade automated coding platform.

AgentOne can stand alone or integrate directly with Interplay, where generated code becomes part of a governed, visual, drag-and-drop system for orchestration, runtime, and deployment.

Think of tools like Lovable or Cursor, but with built-in security, architectural guardrails, governance, very large context windows, the ability to reason across hundreds or thousands of files at once, and production-ready deployment from day one.

To show prospective clients what’s possible, we often build applications live in the first meeting. It takes minutes to stand up a working prototype — not weeks.

We don’t sell screens and seats. We sell the engine room.

AI can bypass workflows, forms, rules engines, and UI-heavy software. Look at what got hit on Tuesday:

- LegalZoom: document assembly workflows

- Intuit: tax preparation logic

- PayPal: checkout and payment flows

- Expedia Group: booking workflows

- Thomson Reuters: legal research interfaces

Anything that looks like “AI sitting on top of a shared model” suddenly feels exposed.

The durable value sits underneath — in the runtime and in the modularity. That’s what enables rapid change, fast experimentation, and continuous improvement against real business outcomes.

3. The selloff was broad — but the long-term disruption won’t be evenly distributed

The selloff swept across legal, tax, payments, travel, and research software—different industries, same vulnerability: interaction logic and workflow automation.

Even large systems of record — ERP, HR, finance, and legal platforms like SAP, Oracle, Workday, FIS, Thomson Reuters, and LexisNexis — are now being discussed as facing disruption risk from AI.

But not all layers of the stack face the same risk.

AI can replace clerical workflows. Enterprises still need plumbing they can trust.

Public AI APIs are widely accessible. Private AI is where Iterate excels and where it is uniquely differentiated.

For privacy, security, and governance reasons, boards and C-suites are waking up to the need for AI that runs:

- on enterprise-owned hardware

- behind firewalls or air-gapped

- under explicit operational control

We write code close to the metal. That means managing AI at the chip level — memory, compute, and networking — which is required for efficient, scalable AI processing.

This is how we’ve helped enterprises cut AI processing costs by up to 95%, while improving latency and performance.

4. “Sticky software” just stopped looking sticky

AI breaks the assumption that switching enterprise software is too hard.

Enterprises are now asking:

- Can we swap models without re-platforming?

- Can we move inference as costs or regulation change?

- Can we control data paths — and own our models instead of renting them?

Those are Iterate-native questions.

Interplay enables safe switching. Generate lets teams act on that flexibility quickly. And when needed, we also build custom AI capabilities, fast and affordably.

5. Applications are expressions of the engine — not the moat

Applications are how value shows up — not where it’s locked in.

For hospital CFOs, we built five AI agents that:

- read payer contracts

- analyze unpaid insurance claims

- compare claims to contract terms

- determine what should have been paid

- draft resubmission letters

That five-step process was humanly impossible at this scale before AI. An average hospital has 110,000 unpaid claims per year, and we can now process them every day, in just a few minutes.
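The five steps can be pictured as a chain of small, independently replaceable stages. The sketch below is a hypothetical illustration, not Iterate's implementation: the field names (`procedure_code`, `billed_units`, `paid_amount`), the `CODE=RATE` contract format, the procedure codes, the rates, and the letter text are all made up, and a production system would use LLM agents (not plain functions) to read contracts and draft letters.

```python
from dataclasses import dataclass


@dataclass
class Claim:
    claim_id: str
    procedure_code: str
    billed_units: int
    paid_amount: float


def read_contract(contract_text: str) -> dict[str, float]:
    """Step 1: parse hypothetical 'CODE=RATE' lines into per-unit contracted rates."""
    rates = {}
    for line in contract_text.strip().splitlines():
        code, rate = line.split("=")
        rates[code.strip()] = float(rate)
    return rates


def analyze_claims(claims: list[Claim], rates: dict[str, float]) -> list[Claim]:
    """Step 2: keep only claims whose procedure code the contract covers."""
    return [c for c in claims if c.procedure_code in rates]


def compare_to_contract(claims: list[Claim], rates: dict[str, float]) -> list[tuple[Claim, float]]:
    """Step 3: pair each claim with the amount the contract says it should pay."""
    return [(c, rates[c.procedure_code] * c.billed_units) for c in claims]


def determine_shortfalls(pairs: list[tuple[Claim, float]]) -> list[tuple[Claim, float]]:
    """Step 4: keep underpaid claims, together with the amount still owed."""
    return [(c, round(expected - c.paid_amount, 2)) for c, expected in pairs if expected > c.paid_amount]


def draft_letter(claim: Claim, shortfall: float) -> str:
    """Step 5: draft a simple resubmission letter for one underpaid claim."""
    return (
        f"Claim {claim.claim_id}: contract terms require an additional "
        f"${shortfall:,.2f} for procedure {claim.procedure_code}. Please reprocess."
    )


def process_claims(contract_text: str, claims: list[Claim]) -> list[str]:
    """Run the five stages in sequence and return the drafted letters."""
    rates = read_contract(contract_text)
    covered = analyze_claims(claims, rates)
    pairs = compare_to_contract(covered, rates)
    return [draft_letter(c, s) for c, s in determine_shortfalls(pairs)]


if __name__ == "__main__":
    contract = "P100=100.0\nP200=150.0"
    claims = [
        Claim("A1", "P100", 2, 150.0),  # underpaid by 50.00
        Claim("A2", "P200", 1, 150.0),  # paid in full
        Claim("A3", "P999", 1, 0.0),    # not covered by this contract
    ]
    for letter in process_claims(contract, claims):
        print(letter)
```

Because each stage has a narrow input and output, any one of them (for instance, replacing the rule-based `read_contract` with an LLM-based contract reader) can be swapped without changing the others.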

Across the U.S. hospital system (excluding physician practices), this represents an estimated $180 billion annual black hole.

Our first hospital client, a $200 million hospital, identified $17.4 million in resubmittable claims.

That outcome required a new architecture — one where agents can be composed, modified, and improved continuously, and run behind firewalls for HIPAA compliance.

We use the same architecture for computer vision models operating in European gas stations — enabling license-plate-based payments while complying with GDPR requirements.

6. Our moat is exactly where durable value is forming

Orchestration. Runtime. Deployment. Control.

This is where our defensibility lives.

We currently have 8 patents granted, 14 pending, and a dozen more in development, many embedded directly in Interplay and AgentOne.

These patents focus on hard problems that don’t commoditize easily, including:

- multi-threaded AI execution and runtime optimization

- drag-and-drop LLM orchestration and model mobility

- modular AI container architectures that allow agents and models to be composed, swapped, and governed independently

A few concrete examples:

- US-12430556 — Large Language Modules in Modular Programming (drag-and-drop LLM orchestration)

- US-11803335 — Data Pipeline and Access Across Multiple Machine-Learned Models

- US-10929181 — Developer-Independent Resource-Based Multithreading Module

We don’t fight commoditization. We architect around it.

7. Governance is not optional — and it’s coming fast

We’re deeply focused on governance because of what’s coming next.

Former OpenAI researchers have outlined credible scenarios in papers like AI-2027 and Situational Awareness, describing a world of increasingly autonomous, fast-moving agents operating beyond human-scale oversight.

We take that seriously, and enterprise board members, CISOs, and corporate officers should too.

That’s why we built AgentWatch.

AgentWatch provides real-time visibility, monitoring, and control over AI agents — including what they access, what actions they take, and how they behave over time.

It can be deployed:

- in private data centers

- on-prem

- or alongside air-gapped environments

AgentWatch isn’t theoretical governance. It’s operational governance — designed for the AI systems enterprises are already running, and the more powerful ones that are coming.

Final thoughts

Some software stocks may bounce in the short term.

But AI is more like electricity than software — it will rewrite operating assumptions, cost structures, and long-standing business models.

This selloff isn’t about AI killing software. It’s about old-fashioned abstractions collapsing.

The market will eventually distinguish between fragile application layers and durable, governed infrastructure.

When it does, Iterate is positioned exactly where enterprise value is forming — below the abstraction layer that’s breaking.

Iterate.ai enables enterprises to deploy and govern AI at a fraction of hyperscaler costs — with full optionality and control — whether the enterprise is global or a mid-market leader. No lock-in to AI models. No shared GPU dependency.
